Quick summary

- AI personalization for grocery delivery means showing each shopper what they’re likely to buy next, rather than blasting everyone with the same deals.

- Good personalization turns routine purchases into repeat revenue: smarter suggestions raise conversion and nudge shoppers toward bigger baskets.

- Start with first-party signals (order history, cart actions, search); they are usually more relevant than purchased third-party lists.

- Use hybrid recommenders (collaborative + content-based) and rerank by inventory and delivery speed so suggestions are actually shippable.

- Metrics that matter: conversion lift, average order value (AOV), repeat-purchase rate, and substitute acceptance.

- Quick wins: “people also bought” on product pages, reorder prompts in app, and one-click substitutes at checkout.

- Common traps: recommending out-of-stock items, over-personalizing to the point of creepiness, and ignoring per-store assortment.

- Next step: pick one metric, run a short A/B test, and build the simplest model that moves that needle.

Introduction

AI personalization for grocery delivery is what happens when your app knows you well enough to suggest the yogurt you forget every other week, and gets that prediction right. It’s not magic; it’s pattern-matching at scale: tiny signals from clicks, carts, and past orders turned into useful nudges. When those nudges land, carts grow.

But personalization can misfire. Show the wrong product at checkout and you annoy a customer; show the right one and you look like a mind reader. So the real work is less about clever models and more about clean data, sensible business rules, and respect for the user’s space. Curious which parts matter most?

This article walks through what works (and what doesn’t), the metrics that prove impact, and a practical rollout plan you can test in weeks, not quarters. Read on for a roadmap that helps you increase orders without sounding desperate.

AI Personalization’s Impact on Grocery Delivery

A McKinsey report found that personalization most often drives a 10–15% revenue lift, and that faster-growing companies derive about 40% more of their revenue from personalization than slower-growing peers. That’s not a minor tweak; it’s strong evidence that tailored recommendations move the revenue needle, especially in digital commerce, where competition is fierce.

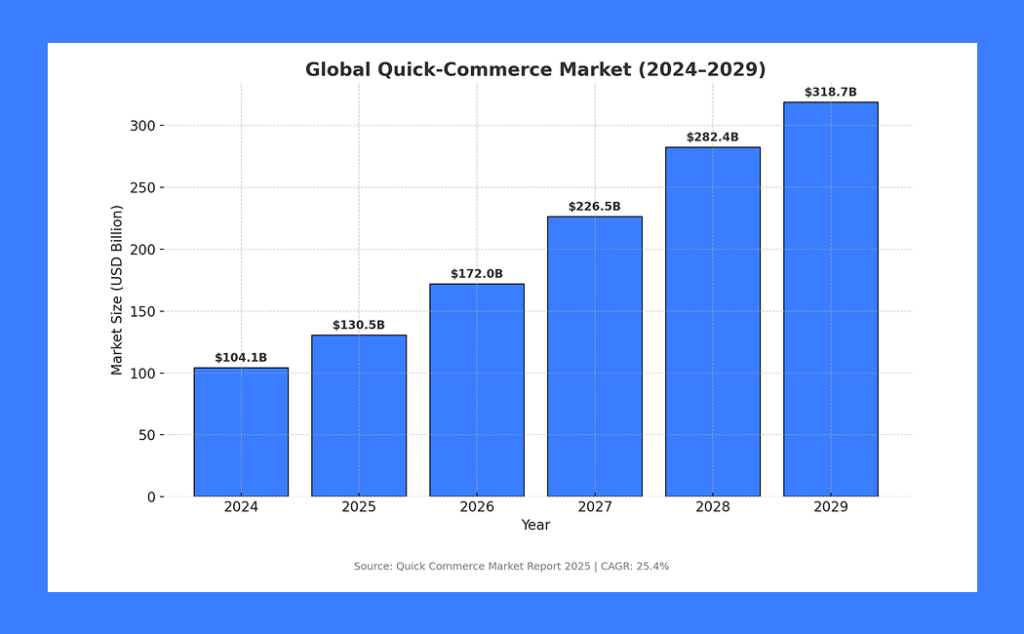

Zooming out to the industry level, the Quick Commerce Market Report 2025 values the global quick-commerce sector at US$104.1 billion in 2024, on track to hit US$130.51 billion in 2025 and a staggering US$318.67 billion by 2029. That is 25.4% growth from 2024 to 2025 and an implied CAGR of about 25% from 2025 to 2029, showing just how fast customer expectations for near-instant delivery are reshaping the grocery landscape.
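The growth rates behind these figures follow from the standard compound annual growth rate formula, (end / start)^(1 / years) − 1. Here is a quick check in Python that uses only the values quoted above; the helper name `cagr` is our own.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes in US$ billions, as quoted from the Quick Commerce Market Report 2025.
print(f"2024 -> 2025: {cagr(104.1, 130.51, 1):.1%}")   # 2024 -> 2025: 25.4%
print(f"2025 -> 2029: {cagr(130.51, 318.67, 4):.1%}")  # 2025 -> 2029: 25.0%
```

The 25.4% figure matches the single 2024 to 2025 step. The 2025 to 2029 projection implies about 25.0% a year.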

Put the two insights together and you see the opportunity: personalization isn’t just a nice feature, it’s a multiplier in a market growing by hundreds of billions of dollars. For any grocery app, the real question is simple: will you ride that wave with AI personalization, or leave orders (and loyalty) on the table?

Why does personalization matter for grocery delivery?

People don’t shop for groceries the way they browse electronics. Grocery choices are routine, time-sensitive, and habit-driven. Personalization turns those small daily decisions into opportunities: replace friction with convenience and you earn repeat behavior.

Recommendation systems suggest the right substitutes when items are out of stock. They nudge complementary buys (olive oil with pasta). If your algorithms are tuned properly, they remind a user to reorder pet food just before the bag runs out.
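A simple heuristic can approximate the reorder nudge: estimate the next purchase from the median gap between past purchases of the same item. This is a minimal sketch and not a model the article prescribes. `next_reorder_date`, `should_remind` and the two-day `lead_days` window are hypothetical choices.

```python
from __future__ import annotations

from datetime import date, timedelta
from statistics import median


def next_reorder_date(purchase_dates: list[date]) -> date | None:
    """Estimate the next purchase date from the median gap between past purchases."""
    if len(purchase_dates) < 2:
        return None  # not enough history to estimate a cadence
    ordered = sorted(purchase_dates)
    gaps = [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]
    # date + timedelta ignores sub-day parts, so a fractional median is safe here
    return ordered[-1] + timedelta(days=median(gaps))


def should_remind(purchase_dates: list[date], today: date, lead_days: int = 2) -> bool:
    """Send the reminder a few days before the item is expected to run out."""
    due = next_reorder_date(purchase_dates)
    return due is not None and today >= due - timedelta(days=lead_days)


# Hypothetical history: dog food bought every four weeks.
history = [date(2024, 1, 1), date(2024, 1, 29), date(2024, 2, 26)]
print(next_reorder_date(history))  # 2024-03-25
```

The median holds up better than the mean when a shopper skips a cycle once. A production system would also account for pack size and seasonality.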

How AI-powered grocery recommendations actually work

Recommendation systems for grocery look simple on the surface (“you might also like…”), but under the hood they’re a mix of data, models, business rules, and operations. The point is practical: help shoppers find what they want faster, recover lost sales when items are out of stock, and nudge add-on purchases without annoying the customer.

Core modelling approaches

- Collaborative filtering (CF): finds patterns in user–item interactions. If shoppers A and B often buy the same cereals, CF will surface what B bought that A hasn’t seen yet. It’s fast to deploy and powerful for cross-sells, but cold-starts (new users or products) are a limitation.

- Content-based models: recommend items that share attributes with what the user liked before, such as brand, organic labels, and dietary tags. These work well for niche preferences (gluten-free, vegan).

- Hybrid systems: combine CF and content signals; a hybrid balances popularity with personalized taste and reduces cold-start gaps.

- Sequence & session models: use the order of clicks or cart additions. If someone adds pasta, then sauce, session-aware models can optimize for that immediate context.

- Contextual bandits & reinforcement approaches: choose which recommendation variant to show in real time to maximize a short-term objective (clicks or conversion) while still exploring new options. These are useful when you want to personalize promotions or test new placements dynamically.
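The sketch below shows a minimal hybrid. The collaborative side is item-item co-purchase counts, normalized by item popularity (a cosine-style score). The content side is Jaccard overlap of attribute tags. Both signals come from the list above; the exact formulas, the `alpha` weight and all item names are illustrative assumptions, not a production recommender.

```python
from collections import Counter
from itertools import combinations
from math import sqrt


def cooccurrence_scorer(baskets):
    """Collaborative signal: co-purchase counts, cosine-normalized by item popularity."""
    item_counts = Counter()
    pair_counts = Counter()
    for basket in baskets:
        items = sorted(set(basket))
        item_counts.update(items)
        pair_counts.update(combinations(items, 2))  # keys are (smaller, larger) pairs

    def score(a, b):
        together = pair_counts[(min(a, b), max(a, b))]
        if together == 0:
            return 0.0  # cold start: no shared baskets yet
        return together / sqrt(item_counts[a] * item_counts[b])

    return score


def jaccard(tags_a, tags_b):
    """Content signal: overlap of attribute tags such as brand, organic, or dietary labels."""
    union = tags_a | tags_b
    return len(tags_a & tags_b) / len(union) if union else 0.0


def hybrid_related(anchor, tags, cf_score, alpha=0.7, k=3):
    """Blend CF and content similarity; alpha is the weight on the CF side."""
    scored = []
    for candidate in tags:
        if candidate == anchor:
            continue
        cf = cf_score(anchor, candidate)
        content = jaccard(tags[anchor], tags[candidate])
        scored.append((alpha * cf + (1 - alpha) * content, candidate))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, ties by name
    return [candidate for _, candidate in scored[:k]]


# Hypothetical baskets and tags.
baskets = [
    ["pasta", "sauce", "parmesan"],
    ["pasta", "sauce"],
    ["pasta", "olive_oil"],
    ["gf_pasta", "gf_sauce"],
]
tags = {
    "pasta": {"dry_goods", "italian"},
    "sauce": {"italian"},
    "parmesan": {"dairy", "italian"},
    "olive_oil": {"italian", "oil"},
    "gf_pasta": {"dry_goods", "italian", "gluten_free"},
    "gf_sauce": {"italian", "gluten_free"},
    "gf_penne": {"dry_goods", "italian", "gluten_free"},  # new SKU, never purchased
}
cf = cooccurrence_scorer(baskets)
print(hybrid_related("pasta", tags, cf))     # ['sauce', 'olive_oil', 'parmesan']
print(hybrid_related("gf_penne", tags, cf))  # ['gf_pasta', 'gf_sauce', 'pasta']
```

The second call shows why the blend helps with cold start. `gf_penne` has no purchase history, so its collaborative score is zero everywhere. The content side alone still surfaces sensible gluten-free neighbors.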

Practical engineering stack

A working stack usually has these pieces:

- Feature pipeline/data warehouse: unified user profiles, SKU metadata, historical purchases, timestamps, and location tags.

- Model training: batch jobs that create embeddings or ranking models; start weekly and increase frequency as needed.

- Feature store & online store: store precomputed features for low-latency lookups (user vectors, item vectors, inventory flags).

- Serving layer: a low-latency API that returns ranked recommendations, with a business-rule reranker layered on top.

- Instrumentation & experiments: A/B framework, logging, and dashboards.

- Ops & monitoring: alerting on latency, error rates, and mismatches between predicted and actual inventory.

Reranking and business rules

Model scores are rarely final. Before UI display, recommendations are reranked to enforce margins, avoid perishables in certain promos, and ensure local availability. This is where product strategy meets ML. The model suggests; business rules protect the customer experience and the P&L.
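The reranking step can be sketched as two hard filters followed by a soft score adjustment. The filters are local stock and no perishables on promo surfaces; the adjustment is a small margin boost. The `Candidate` fields, `min_stock` threshold and `margin_weight` are hypothetical and would need tuning against your own P&L.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    sku: str
    model_score: float  # relevance score from the recommender
    margin: float       # gross margin as a fraction of price (assumed field)
    perishable: bool
    in_stock_qty: int   # units at the shopper's local store or warehouse


def rerank(candidates, *, promo_surface=False, margin_weight=0.2, min_stock=2, k=6):
    """Apply hard business rules, then a soft margin boost, to model output."""
    eligible = [
        c for c in candidates
        if c.in_stock_qty >= min_stock              # never show what can't ship
        and not (promo_surface and c.perishable)    # keep perishables out of promos
    ]
    ranked = sorted(
        eligible,
        key=lambda c: c.model_score + margin_weight * c.margin,
        reverse=True,
    )
    return [c.sku for c in ranked[:k]]


cands = [
    Candidate("strawberries", 0.90, 0.30, True, 12),
    Candidate("granola", 0.80, 0.40, False, 5),
    Candidate("oat_milk", 0.85, 0.10, False, 0),  # out of stock locally
    Candidate("honey", 0.60, 0.50, False, 8),
]
print(rerank(cands))                      # ['strawberries', 'granola', 'honey']
print(rerank(cands, promo_surface=True))  # ['granola', 'honey']
```

The hard rules run before scoring so that no margin boost can push an unshippable item back into view.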

Addressing common technical problems

Cold-start? Use category-level popularity and content signals for new SKUs or anonymous users. Noisy SKUs? Consolidate and canonicalize product variants. Inventory mismatch? Integrate per-warehouse stock and add a freshness score to prefer recently verified items. Privacy concerns? Keep PII out of model features where possible and provide clear opt-outs.
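The category-popularity fallback for anonymous or new users takes only a few lines. This sketch assumes orders arrive as lists of SKUs and a catalog maps SKU to category. The function names, `browsing_category` and the sample data are hypothetical.

```python
from collections import Counter


def popular_by_category(orders, catalog):
    """Count purchases per SKU within each category (orders are lists of SKUs)."""
    counts = {}
    for order in orders:
        for sku in order:
            counts.setdefault(catalog[sku], Counter())[sku] += 1
    return counts


def recommend(user_history, personalized, popularity, browsing_category, k=3):
    """Use personalized results when the user has history; otherwise fall back."""
    if user_history and personalized:
        return personalized[:k]
    # Cold start: anonymous or new user, so show what's popular in this category.
    fallback = popularity.get(browsing_category, Counter())
    return [sku for sku, _ in fallback.most_common(k)]


catalog = {"milk": "dairy", "eggs": "dairy", "yogurt": "dairy", "bread": "bakery"}
orders = [["milk", "eggs"], ["milk", "bread"], ["eggs", "milk"], ["yogurt"]]
popularity = popular_by_category(orders, catalog)
print(recommend([], [], popularity, "dairy"))  # ['milk', 'eggs', 'yogurt']
```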


Metrics that prove personalization is working

Good recommendations change behavior. To know if they do, you need both business KPIs and model-level metrics, plus a feedback loop that ties the two together.

Business-facing KPIs

These show whether personalization moves money or loyalty:

- Conversion rate (session → order): the most direct signal that recommendations helped close a sale.

- Average order value (AOV): cross-sells and bundles should lift this.

- Repeat purchase rate/retention: personalization aims to make customers come back more often.

- Order frequency: an increase here often precedes higher lifetime value.

- Substitution acceptance rate: percent of times a recommended substitute is accepted when the primary item is out of stock. A high value means useful recommendations.

- Fulfillment success/fill rate: recommendations should not increase failed fulfillments; if they do, remove risky items from the surface.

- Incremental revenue & payback: measure the extra dollars attributable to personalization and calculate months-to-payback versus the investment.
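Several of these KPIs reduce to simple ratios over a session log. This is a minimal sketch that assumes a hypothetical per-session record; real pipelines would compute the same ratios in the warehouse. Conversion is converted sessions over all sessions, AOV is revenue over orders, and substitution acceptance is accepted substitutes over offered substitutes.

```python
from dataclasses import dataclass


@dataclass
class Session:
    converted: bool
    order_value: float = 0.0  # basket total if the session converted
    subs_offered: int = 0     # substitutes shown for out-of-stock items
    subs_accepted: int = 0    # substitutes the shopper kept


def business_kpis(sessions):
    """Conversion rate, AOV, and substitution acceptance from session records."""
    orders = [s for s in sessions if s.converted]
    offered = sum(s.subs_offered for s in sessions)
    return {
        "conversion_rate": len(orders) / len(sessions) if sessions else 0.0,
        "aov": sum(s.order_value for s in orders) / len(orders) if orders else 0.0,
        "substitution_acceptance": (
            sum(s.subs_accepted for s in sessions) / offered if offered else 0.0
        ),
    }


sessions = [
    Session(True, 50.0, subs_offered=2, subs_accepted=1),
    Session(False),
    Session(True, 70.0, subs_offered=1, subs_accepted=1),
    Session(False, subs_offered=1),
]
print(business_kpis(sessions))
# {'conversion_rate': 0.5, 'aov': 60.0, 'substitution_acceptance': 0.5}
```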

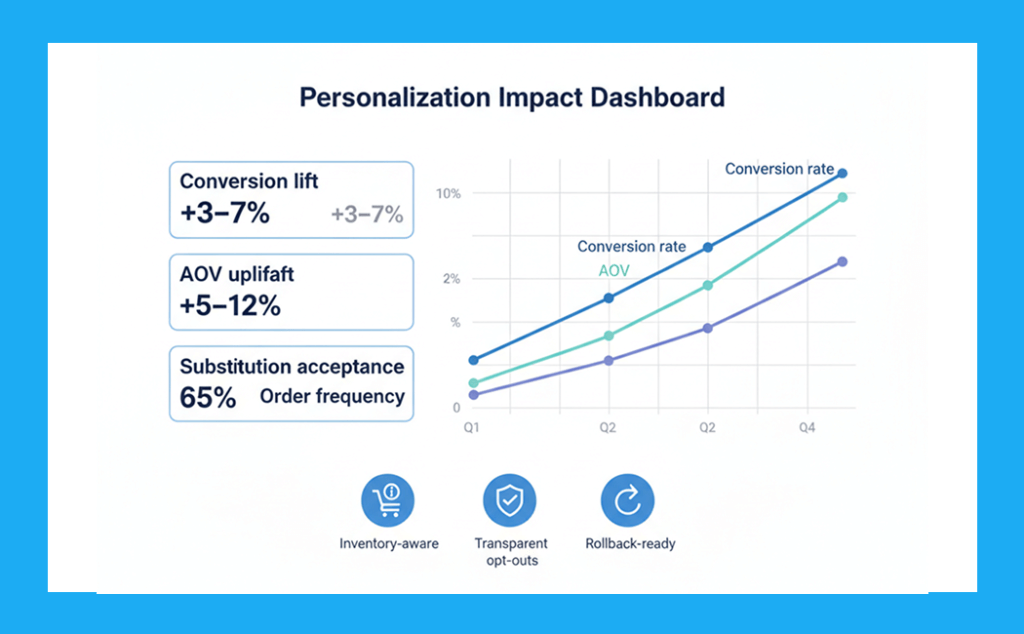

Targets vary by business, but as a rough rule of thumb, early wins tend to look like low-single-digit conversion lifts and mid-single-digit AOV uplifts; larger, sustained lifts justify scale investment.

Model & product metrics

These measure the health and quality of the recommender itself:

- Click-through rate (CTR) on recommended items: indicates engagement but must be balanced against conversion and repeat effects.

- Diversity & novelty: avoid recommending the same few best-sellers to everyone; diversity prevents fatigue.

- Calibration: predicted probabilities should match observed outcomes; if the model is overconfident, experiment outcomes may surprise you.

- Latency & error rates: technical reliability is non-negotiable for live commerce.
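Two of these health checks can be made concrete: precision@k (the dashboard below tracks precision@10) and calibration. For calibration, the sketch uses expected calibration error (ECE), one common summary measure. ECE compares average predicted probability with the observed rate inside probability bins. The bin count and sample data are illustrative assumptions.

```python
def precision_at_k(recommended, purchased, k=10):
    """Fraction of the top-k recommended SKUs that the shopper actually bought."""
    hits = sum(1 for sku in recommended[:k] if sku in purchased)
    return hits / k


def expected_calibration_error(probs, outcomes, n_bins=5):
    """Weighted average gap between predicted probability and observed rate per bin."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, outcomes):
        bins[min(int(p * n_bins), n_bins - 1)].append((p, y))  # p == 1.0 goes in the last bin
    ece = 0.0
    for members in bins:
        if members:
            avg_pred = sum(p for p, _ in members) / len(members)
            observed = sum(y for _, y in members) / len(members)
            ece += len(members) / len(probs) * abs(avg_pred - observed)
    return ece


recs = ["yogurt", "granola", "bananas", "oat_milk"]
print(precision_at_k(recs, {"granola", "oat_milk", "eggs"}, k=4))  # 0.5
# Overconfident model: predicts 90% purchase probability, only 1 in 4 converts.
print(round(expected_calibration_error([0.9] * 4, [1, 0, 0, 0]), 2))  # 0.65
```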

Experimentation & attribution

- A/B testing: the gold standard. Randomize users (or sessions) to control and treatment and measure business KPIs over a representative period. Always predefine the primary metric (e.g., conversion lift, AOV).

- Holdout & incrementality tests: to measure true causal impact, keep a holdout group that never sees personalization and compare long-term LTV and retention.

- Attribution windows: use appropriate windows (e.g., 7-, 14-, 30-day) to capture delayed conversions typical in grocery.
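For a conversion-rate A/B test, a two-proportion z-test is one standard way to decide whether the observed lift is larger than chance. This sketch assumes users are randomized independently and uses hypothetical counts. Continuous metrics such as AOV need a different test (for example, a t-test or bootstrap).

```python
from math import erfc, sqrt


def conversion_lift_test(conv_a, n_a, conv_b, n_b):
    """Two-proportion z-test comparing control (a) with treatment (b) conversion."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = erfc(abs(z) / sqrt(2))  # two-sided: 2 * (1 - Phi(|z|))
    return {"relative_lift": (p_b - p_a) / p_a, "z": z, "p_value": p_value}


# Hypothetical test: 5.0% control vs 5.5% treatment, 20,000 users per arm.
result = conversion_lift_test(1_000, 20_000, 1_100, 20_000)
print(result)
```

Fix the sample size before launch and read the result once at the end. Repeatedly peeking at a fixed-horizon test like this one inflates false positives.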

Monitoring & guardrails

Running models without safety nets is risky. Implement:

- Real-time alerts for negative movement in conversion, retention, or an uptick in support tickets related to recommendations.

- Quality checks: daily “sanity” reports for substitution acceptance and out-of-stock rates.

- Automated rollbacks if experiments show net harm to retention or AOV.

- Bias & fairness checks to ensure recommendations don’t systematically disadvantage categories or neighborhoods.
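An automated rollback rule can start as a simple comparison between arms. A metric breaches its guardrail when it moves in its harmful direction by more than a relative tolerance. The metric names, directions and the 2% tolerance are hypothetical. This point-estimate check should be paired with a significance test before acting on small samples.

```python
# Hypothetical guardrails: metric name -> direction in which a change is harmful.
GUARDRAILS = {
    "retention": "down",
    "aov": "down",
    "conversion": "down",
    "rec_support_tickets": "up",
}


def breached_guardrails(control, treatment, tolerance=0.02):
    """List metrics where treatment is worse than control by more than `tolerance`."""
    breaches = []
    for metric, bad_direction in GUARDRAILS.items():
        base, new = control[metric], treatment[metric]
        if base == 0:
            continue  # relative change undefined; handle separately
        change = (new - base) / base
        if (bad_direction == "down" and change < -tolerance) or (
            bad_direction == "up" and change > tolerance
        ):
            breaches.append(metric)
    return breaches


def should_roll_back(control, treatment, tolerance=0.02):
    return bool(breached_guardrails(control, treatment, tolerance))


control = {"retention": 0.40, "aov": 52.0, "conversion": 0.050, "rec_support_tickets": 120}
treatment = {"retention": 0.38, "aov": 54.0, "conversion": 0.051, "rec_support_tickets": 121}
print(breached_guardrails(control, treatment))  # ['retention']
```

In this example the treatment lifts AOV but loses retention, which is exactly the kind of trade-off the rollback rule should catch.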

Coordinate closely with product and your grocery delivery app development team to ensure events are instrumented correctly, logging is complete, and experiment assignments are enforced consistently across front-ends and backend services.
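One common way to keep assignments consistent across app, web and backend is deterministic hashing of the user ID together with the experiment name. Every service then computes the same variant without a shared lookup table. This is a sketch; the experiment name and the 50/50 split are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically map a user to 'control' or 'treatment' for one experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # roughly uniform in [0, 1)
    return "treatment" if bucket < treatment_share else "control"


print(assign_variant("user-123", "cart_cross_sell_v1"))
```

Including the experiment name in the hash means a user's bucket in one test does not predict their bucket in the next.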

Putting it into a dashboard

A pragmatic dashboard ties model outputs to business impact:

- Top panel: conversion rate, AOV, and order frequency (trend lines).

- Middle panel: CTR on recs, substitution acceptance, and in-stock percentage for shown items.

- Bottom panel: model health (precision@10, latency, and error rate).

Include cohort filters (new vs. returning, location, device) so you can see where personalization works best, and add annotation layers to record when campaigns or inventory pushes happened; those events often explain sudden spikes or dips.

Implementation checklist: start small, measure fast

Pick one clear use case to win first

Begin with a single, well-scoped goal. Don’t try to personalize the whole app on day one. Choose an area where you can measure impact quickly: product-page related items, cart cross-sells, or a reorder reminder. Focus produces a clear signal: you’ll see whether customers respond before you commit more engineering time. An MVP recommender that surfaces a short list of items is often enough to prove value.

You can learn from platform plays: Instacart’s business model shows how product discovery and promoted placements can be monetized at scale, but you only want that after you’ve proven the lift on a small surface.

Quick actions:

- Launch 6–8 recommendations on product or cart pages.

- Use business rules to block poor matches (e.g., perishables in promos).

This approach keeps the project nimble while still showing measurable lift from AI-powered grocery recommendations.

Data plumbing and hygiene

Good models start with good data. Consolidate order history, SKU definitions, clickstreams, and local inventory into a single view. Clean titles, map variants to canonical products, and ensure timestamps and location tags are accurate. If inventory isn’t reliable, recommendations will regularly fail the buyer, and failure erodes trust faster than a slow rollout ever will.
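Title cleanup and variant mapping can start with plain string normalization, before any fuzzy matching. The sketch makes these simplifying assumptions:

- Pack sizes such as "500g" or "1kg" count as variant attributes, so they are stripped from the canonical key.
- The size regex and sample SKUs are hypothetical.

```python
import re
import unicodedata

# Hypothetical pack-size pattern, e.g. "500 g", "1kg", "6 x 330ml".
SIZE_PATTERN = re.compile(r"\b(\d+\s*x\s*)?\d+(\.\d+)?\s*(kg|g|ml|l|oz|lb|ct|pack)\b")


def normalize_title(title: str) -> str:
    """Lowercase, strip accents, pack sizes, and punctuation to get a canonical key."""
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode()
    text = SIZE_PATTERN.sub(" ", text.lower())
    text = re.sub(r"[^a-z0-9 ]+", " ", text)
    return " ".join(text.split())


def canonical_groups(skus: dict[str, str]) -> dict[str, list[str]]:
    """Group SKU ids whose normalized titles match."""
    groups: dict[str, list[str]] = {}
    for sku_id, title in skus.items():
        groups.setdefault(normalize_title(title), []).append(sku_id)
    return groups


skus = {
    "A1": "Greek Yogurt 500g",
    "A2": "greek yogurt (500 g)",
    "A3": "Greek Yogurt 1kg",
    "B1": "Crème Fraîche 200ml",
}
print(canonical_groups(skus))
# {'greek yogurt': ['A1', 'A2', 'A3'], 'creme fraiche': ['B1']}
```

Exact-key grouping like this misses brand abbreviations and typos. Those usually need a curated mapping table or fuzzy matching layered on top.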

MVP design and rollout

Build the simplest useful pipeline: batch-train an item-embedding model, serve nearest-neighbor recommendations, and rerank by in-stock signals. Early on, batched scoring and periodic refreshes work fine; you can graduate to streaming if the lifts justify the cost. Keep the UX simple: show visible reasons for suggestions, make them easy to dismiss, and offer an opt-out for personalized offers. This is a practical path to machine learning grocery delivery features without overbuilding.
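The batch-then-serve split can be sketched in pure Python. An offline job precomputes each item's nearest neighbors by cosine similarity over item embeddings, and the online path only reads that table and drops out-of-stock items. The embeddings here are tiny hand-written placeholders standing in for whatever model you train. `batch_neighbors` and `serve` are hypothetical names.

```python
from math import sqrt


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def batch_neighbors(embeddings, k=5):
    """Offline job: precompute each item's top-k most similar items."""
    table = {}
    for item, vec in embeddings.items():
        sims = [
            (cosine(vec, other_vec), other)
            for other, other_vec in embeddings.items()
            if other != item
        ]
        sims.sort(key=lambda s: (-s[0], s[1]))
        table[item] = [other for _, other in sims[:k]]
    return table


def serve(anchor, table, in_stock, k=3):
    """Online path: read precomputed neighbors and keep only locally stocked items.

    The batch job stores more neighbors than we show, so filtering
    out-of-stock items still leaves enough to fill the slot.
    """
    return [sku for sku in table.get(anchor, []) if sku in in_stock][:k]


# Placeholder embeddings (hypothetical 3-d vectors).
embeddings = {
    "pasta": [0.9, 0.1, 0.0],
    "sauce": [0.8, 0.2, 0.1],
    "parmesan": [0.7, 0.3, 0.2],
    "dog_food": [0.0, 0.1, 0.9],
    "cat_food": [0.1, 0.0, 0.95],
}
table = batch_neighbors(embeddings)
print(serve("pasta", table, in_stock={"parmesan", "cat_food"}, k=1))  # ['parmesan']
```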

Experimentation and metrics

Design experiments so results are decisive. Test one variable at a time (placement, model, or copy) and track conversion lift, AOV, repeat rate, and substitution acceptance. Watch operational signals too: recommendation-serving latency, mismatch errors, and out-of-stock complaints. If a variation improves clicks but hurts repeat purchases, that’s a red flag. Short-term engagement is not the same as long-term loyalty.

Avoid these common mistakes

Over-personalizing feels creepy

Personalization should help, not stalk. If every screen is a bespoke pitch, customers may pull back. Use personalization sparingly for promotions and prioritize utility: reorder reminders, smart substitutes, and complementary items. When you run AI-powered grocery recommendations, tune frequency and surface simple explanations like “Suggested because you ordered X last month.” That transparency reduces friction and keeps suggestions useful rather than invasive.
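Frequency tuning can start as a per-user daily cap on promotional surfaces, while utility surfaces stay uncapped. This in-memory sketch only illustrates the rule. The surface names and the cap of 3 are hypothetical, and a real deployment would keep the counters in a shared store so every service sees the same counts.

```python
from collections import defaultdict
from datetime import date

# Hypothetical utility surfaces that are never capped.
UTILITY_SURFACES = {"reorder_reminder", "substitute", "complementary"}


class FrequencyCap:
    """Limit how many promotional recommendations a shopper sees per day."""

    def __init__(self, max_per_day: int = 3):
        self.max_per_day = max_per_day
        self._shown = defaultdict(int)  # (user_id, day) -> promo impressions

    def allow(self, user_id: str, day: date, surface: str) -> bool:
        if surface in UTILITY_SURFACES:
            return True  # helpful nudges are not counted against the cap
        key = (user_id, day)
        if self._shown[key] >= self.max_per_day:
            return False
        self._shown[key] += 1
        return True


cap = FrequencyCap(max_per_day=2)
today = date(2024, 5, 1)
print([cap.allow("u1", today, "promo") for _ in range(3)])  # [True, True, False]
```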

Recommending things you can’t deliver

A recommendation that can’t be fulfilled is worse than no recommendation. The model must be inventory-aware and locality-aware: what’s available in one micro-fulfillment center might not be in the next. For machine learning in grocery delivery, this means reranking by local stock and delivery feasibility before anything hits the UI. Live feeds connecting inventory, routing, and the recommender are mandatory, not optional.

Treating personalization as a single feature

Personalization succeeds only when product, data, ops, and marketing align. Put cross-functional ownership in place and budget for ongoing data ops and monitoring. Without that, models are deployed and then ignored, the short-term wins evaporate, and technical debt grows.

Cost vs return

Cost buckets

Costs scale with ambition. An MVP can run on open-source libraries, a small cloud instance, and a couple of engineers. Scaling to per-store models and low-latency serving raises infrastructure and staffing bills. Leaders add real-time pipelines and dedicated data teams, which is where costs rise materially.

Estimating ROI and payback

Think in incremental revenue: small percentage lifts in AOV or order frequency compound across thousands of orders. A simple formula is useful: incremental revenue ≈ baseline AOV × order volume × lift %. Compare that to the additional spending on infrastructure, people, and promotions to estimate payback in months. Many teams aim for a 6–12 month payback on major personalization investments.
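The payback estimate can be written as a small function. One assumption goes beyond the article's formula: incremental revenue is converted to contribution through a `gross_margin` parameter, because revenue is not profit. Setting `gross_margin=1.0` reproduces a revenue-only comparison. All figures are illustrative.

```python
from typing import Optional


def payback_months(
    baseline_aov: float,
    monthly_orders: int,
    lift: float,             # e.g. 0.03 for a 3% AOV lift
    gross_margin: float,     # assumption: share of incremental revenue kept as contribution
    monthly_run_cost: float,
    upfront_cost: float,
) -> Optional[float]:
    """Months until cumulative net gain covers the upfront investment."""
    incremental_revenue = baseline_aov * monthly_orders * lift
    monthly_net = incremental_revenue * gross_margin - monthly_run_cost
    if monthly_net <= 0:
        return None  # never pays back at these numbers
    return upfront_cost / monthly_net


# $40 AOV, 50,000 orders/month, 3% lift -> $60,000/month incremental revenue.
print(payback_months(40.0, 50_000, 0.03, 0.25, 5_000, 100_000))
```

With a 25% margin, $5,000 monthly run cost and $100,000 upfront, the net gain is about $10,000 a month, or roughly a 10-month payback.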

Reduce cost and accelerate returns

You don’t need real-time for everything. Start with batched recommendations and prioritize high-impact spots. Reuse existing data stores rather than building parallel systems. Limit costly personalization to high-value cohorts, and once the baseline lift is proven, monetize recommendation slots with sponsored listings.

For businesses operating at a neighborhood level, hyperlocal quick-commerce dynamics can shorten payback times: because orders are more frequent and fulfillment windows shorter, modest lifts in AOV or frequency convert to revenue faster.

Conclusion

AI personalization for grocery delivery is a multiplier: better suggestions increase AOV; timely reminders raise frequency; local awareness reduces friction. Put data hygiene and inventory sync first, then tune models slowly. Want the lift? Treat personalization as product work, not just a marketing trick.

If you’re ready to move from pilots to scale, consider investing in a production recommender and integrating it into your checkout and push channels. For teams that need help turning ideas into a working product, grocery delivery app development services can bridge engineering, data science, and ops to ship reliable personalization that grows orders and loyalty.

FAQs

Personalization places the right items in front of the right shopper at the right moment: reorders, complementary buys, and timely reminders all lift conversion. Small, well-targeted nudges (think: a reorder reminder for pet food) usually move the needle more than broad promotions.

If you start small (one surface, one test), expect initial signals within weeks and reliable results in 6–12 weeks. Early wins come from simple cross-sells; larger retention effects take longer and need holdout groups to prove incrementality.

Use first-party data: order history, clicks, basic preferences, and local inventory. Keep personally identifiable information out of model features when possible, show users why a suggestion appears, and offer an opt-out to stay on the right side of privacy and trust.

They can, unless you rerank by local inventory and delivery feasibility first. The trick is operational integration: inventory, routing, and the recommender must share the same truth so suggestions are actually fulfillable.

Track conversion lift, AOV, repeat rate, and substitution acceptance; then compare incremental revenue to the cost of infra, people, and promotions. If your tests show consistent AOV or retention gains, personalization usually pays back within months, especially for neighborhood-level quick commerce where order frequency is high.